Agentic Context Engineering (ACE)

If traditional prompting is like writing a static cheat sheet before an exam, Agentic Context Engineering (ACE) is like maintaining a GitHub repository for prompts: one that evolves, branches, and merges over time. Instead of freezing a model’s context in place, ACE treats it as a living playbook that grows smarter with every experience.

Think of context here not as a paragraph of instructions, but as a dynamic memory system: a continuously evolving notebook of strategies, mistakes, and best practices. Every time the model solves (or fails) a task, ACE updates the playbook with new “commits”, preserving what worked, refining what didn’t, and merging new insights into what is already there.
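To make the “commit” analogy concrete, here is a minimal Python sketch, assuming the playbook is stored as a list of short entries, each carrying counters for how often it helped or hurt. `Entry`, `Delta`, `Playbook`, and the pruning rule are hypothetical illustrations, not an API from ACE or any library. The point is that each update is a small delta merged into the existing playbook rather than a full rewrite of the context.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Entry:
    """One playbook item: a strategy, pitfall, or best practice."""
    entry_id: str
    text: str
    helpful: int = 0
    harmful: int = 0


@dataclass
class Delta:
    """A small 'commit' against the playbook (hypothetical format)."""
    add: List[str] = field(default_factory=list)      # new insights to append
    helpful: List[str] = field(default_factory=list)  # ids of entries that worked
    harmful: List[str] = field(default_factory=list)  # ids of entries that misled


class Playbook:
    def __init__(self) -> None:
        self.entries: Dict[str, Entry] = {}
        self._next_id = 1

    def apply(self, delta: Delta) -> None:
        """Merge a delta in place instead of rewriting the whole context."""
        existing = {e.text for e in self.entries.values()}
        for text in delta.add:
            if text in existing:  # skip verbatim duplicates
                continue
            entry_id = f"e{self._next_id}"
            self._next_id += 1
            self.entries[entry_id] = Entry(entry_id, text)
            existing.add(text)
        for entry_id in delta.helpful:
            if entry_id in self.entries:
                self.entries[entry_id].helpful += 1
        for entry_id in delta.harmful:
            if entry_id in self.entries:
                self.entries[entry_id].harmful += 1

    def prune(self) -> None:
        """Drop entries that have hurt more often than they have helped."""
        self.entries = {
            k: e for k, e in self.entries.items() if e.harmful <= e.helpful
        }

    def render(self) -> str:
        """Serialize the playbook into text that can be placed in a prompt."""
        return "\n".join(
            f"[{e.entry_id}] {e.text} (helpful={e.helpful}, harmful={e.harmful})"
            for e in self.entries.values()
        )


# Usage: two "commits", then a cleanup pass.
pb = Playbook()
pb.apply(Delta(add=["Check units before comparing numbers.",
                    "Retry failed API calls once."]))
pb.apply(Delta(add=["Check units before comparing numbers."],  # duplicate, skipped
               helpful=["e1"], harmful=["e2"]))
pb.prune()
print(pb.render())
# [e1] Check units before comparing numbers. (helpful=1, harmful=0)
```

One motivation for this design: applying small deltas keeps earlier lessons intact, whereas regenerating the whole context on every step risks summarizing away details that took many tasks to learn.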

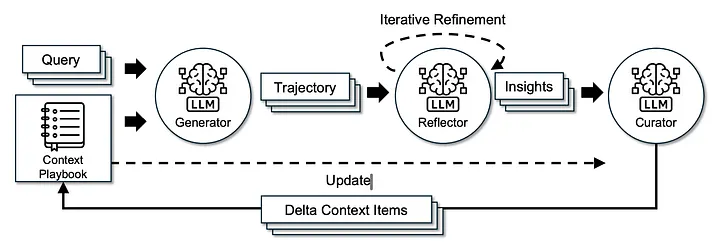

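At its heart, ACE runs a three-step agentic workflow, a self-improving feedback loop inspired by how humans learn: a Generator attempts the task using the current playbook, a Reflector reviews what happened and distills lessons from successes and failures, and a Curator folds those lessons into the playbook as small, targeted updates.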

Generator → Reflector → Curator → Updated Context → Next Task
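The loop can be sketched in Python as a toy, not as the ACE implementation: the three roles are ordinary functions standing in for LLM calls, and the task (deciding whether two strings are equal), the `generator`, `reflector`, and `curator` functions, and `STRIP_RULE` are all hypothetical. What it shows is the control flow: each task is attempted with the current playbook, the outcome is reflected on, and the resulting lesson is merged back before the next task.

```python
from typing import List, Tuple

Playbook = List[str]
Task = Tuple[str, str, bool]  # (left, right, expected answer to "are these equal?")

# Hypothetical lesson the toy Reflector can discover.
STRIP_RULE = "Strip surrounding whitespace before comparing strings."


def generator(task: Task, playbook: Playbook) -> bool:
    """Stand-in for the LLM call: attempt the task using the current playbook."""
    left, right, _ = task
    if STRIP_RULE in playbook:
        return left.strip() == right.strip()
    return left == right


def reflector(task: Task, answer: bool) -> List[str]:
    """Stand-in for the LLM critique: turn a failure into a reusable lesson."""
    left, right, expected = task
    if answer != expected and left.strip() == right.strip():
        return [STRIP_RULE]
    return []


def curator(playbook: Playbook, lessons: List[str]) -> Playbook:
    """Merge lessons as small additions, skipping ones already recorded."""
    return playbook + [lesson for lesson in lessons if lesson not in playbook]


def run_ace_loop(tasks: List[Task], playbook: Playbook) -> Tuple[Playbook, List[bool]]:
    """Generator -> Reflector -> Curator -> updated context -> next task."""
    results = []
    for task in tasks:
        answer = generator(task, playbook)
        results.append(answer == task[2])
        lessons = reflector(task, answer)
        playbook = curator(playbook, lessons)
    return playbook, results


final_playbook, outcomes = run_ace_loop(
    [(" apple", "apple", True), ("pear ", "pear", True), ("kiwi", "plum", False)],
    [],
)
print(outcomes)        # [False, True, True]
print(final_playbook)  # ['Strip surrounding whitespace before comparing strings.']
```

The first task fails, the Reflector turns that failure into a rule, and the Curator adds it to the playbook, so the second task succeeds without any change to the "model" itself.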

ACE turns trial and error into a self-improvement loop. It offers a glimpse of the next generation of AI systems: ones that refine themselves continuously, much like developers iterating on production code.

More reading: Context Engineering for AI Agents; [Research Paper Explained] Self-Improving Language Models

Think of how humans grow. We don’t retrain our brains every night; we remember, reflect, and reorganize. Our progress comes not from rewriting ourselves but from refining what we know. ACE brings that same principle to machines, teaching models to improve by evolving their context rather than by retraining their weights.