Concurrency and parallelism

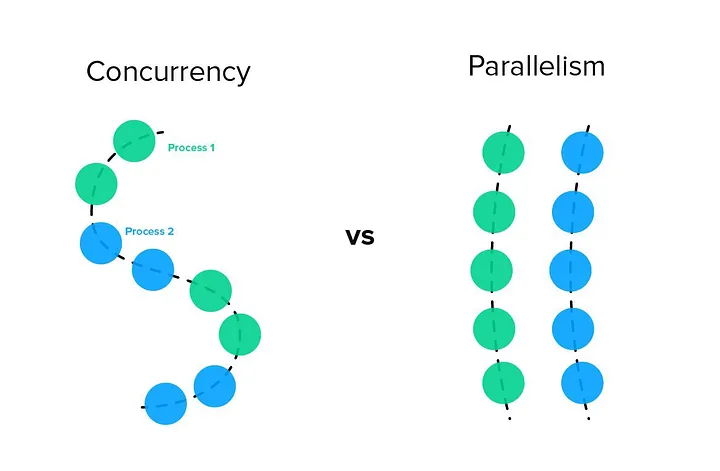

We need to clarify an important point first: concurrency and parallelism are two concepts that are often confused, which leads to misunderstandings.

Parallelism means that two or more tasks are executing at the same instant. This requires hardware support: each task that runs simultaneously occupies its own core, so a machine needs multiple cores (or multiple CPUs) to achieve parallelism. In practice, this is rarely a limitation today. Almost all modern CPUs are multi-core, and single-core CPUs have largely disappeared because multi-core designs significantly outperform them. Modern applications, in turn, are designed to take advantage of multiple cores, since they often need to perform several tasks simultaneously.

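Concurrency means that multiple tasks are in progress during the same period of time: they are managed together and their execution overlaps, but they do not necessarily run at the same instant. Even a single core can handle tasks concurrently by switching between them. For example, JavaScript runs application code on a single thread, and all concurrent tasks are managed by that one thread through its event loop. JavaScript's async/await syntax makes this style of concurrency easy to write: when an async function reaches `await`, it pauses, and the thread is free to work on other tasks until the awaited result is ready.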
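JavaScript's event loop has a close analogue in Python's `asyncio` module. The following is a minimal sketch in Python, not JavaScript; the task names and delays are hypothetical values chosen only for illustration. It starts three coroutines with `asyncio.gather`, and each one records which OS thread it ran on. Because every `await` hands control back to the single event loop, the tasks interleave: all of them start before any finishes, and they finish in order of their delays, yet they all run on one thread.

```python
import asyncio
import threading

async def task(name: str, delay: float, log: list[str], threads: set[int]) -> str:
    # Remember which OS thread is executing this coroutine.
    threads.add(threading.get_ident())
    log.append(f"{name} start")
    # Awaiting yields control to the event loop so other tasks can run meanwhile.
    await asyncio.sleep(delay)
    threads.add(threading.get_ident())
    log.append(f"{name} end")
    return name

async def main() -> tuple[list[str], list[str], set[int]]:
    log: list[str] = []
    threads: set[int] = set()
    # gather runs the three coroutines concurrently on the same event loop.
    results = await asyncio.gather(
        task("A", 0.03, log, threads),
        task("B", 0.01, log, threads),
        task("C", 0.02, log, threads),
    )
    return list(results), log, threads

results, log, threads = asyncio.run(main())
print(log)           # all three start before any of them ends
print(len(threads))  # 1: every task ran on the same thread
```

The total run time is roughly that of the longest sleep rather than the sum of all three, because the waits overlap even though only one thread is doing the work.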

From the operating system's perspective, the available cores must be shared among the threads of many processes. On a typical system there are far more threads than cores, so the OS scheduler has to perform context switches. Briefly explained, every thread has a priority and is either idle (blocked, waiting for something such as I/O or a lock), ready (waiting for CPU time), or running. The scheduler looks at all threads that are not idle and distributes the limited cores among them based on priority. It must also ensure that threads with the same priority get a fair share of CPU time; otherwise, some applications might freeze.

Every time a core is assigned to a different thread, the currently running thread has to be paused and its register state saved so that it can be resumed later. The scheduler also has to notice when idle threads become ready to run again. As you can see, this is a complex operation with real overhead, so as developers we should aim to minimize the number of threads we use. Ideally, the number of active threads should be close to the number of CPU cores, which helps reduce context-switching overhead.
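A common way to keep the thread count close to the core count is to size a thread pool rather than start one thread per job. The sketch below is a minimal Python illustration under that assumption: it caps a `concurrent.futures.ThreadPoolExecutor` at `os.cpu_count()` workers, submits 100 small jobs (the `work` function and the `WORKERS` constant are hypothetical), and reports how many distinct threads actually ran them. Note that in a standard CPython build the global interpreter lock prevents these threads from executing Python bytecode in parallel, so this shows only the bounded-thread-count idea, not a parallel speed-up.

```python
import os
import threading
from concurrent.futures import ThreadPoolExecutor

# Hypothetical cap: one worker per logical core (cpu_count() may return None).
WORKERS = os.cpu_count() or 1

def work(n: int) -> tuple[int, int]:
    # Hypothetical job: return the result plus the id of the thread that ran it.
    return n * n, threading.get_ident()

with ThreadPoolExecutor(max_workers=WORKERS) as pool:
    # 100 jobs are queued, but at most WORKERS threads are ever created to run them.
    outputs = list(pool.map(work, range(100)))

squares = [value for value, _ in outputs]
threads_used = {tid for _, tid in outputs}
print(f"{len(squares)} jobs ran on {len(threads_used)} thread(s), cap was {WORKERS}")
```

The pool reuses its worker threads for all 100 jobs, so the scheduler only ever has at most `WORKERS` of these threads to switch between. `ThreadPoolExecutor`'s own default is somewhat higher than the core count (`min(32, cpu count + 4)` in recent Python versions) because it is aimed at I/O-bound work, where threads spend much of their time idle.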